The Silence After the Alarm

When I finished writing Eyes Everywhere, I knew something was missing.

Not because the dots hadn’t been connected, but because some of them weren’t visible yet. Others were too faint, still forming under the radar of public discourse. India’s surveillance future wasn’t just evolving — it was splintering, sprawling, and reassembling itself in ways even the most cautious privacy advocates couldn’t fully see.

This isn’t a retrospective. It’s a warning fired into the smoke ahead.

The Aadhaar-Laced Identity Web: From Access to Algorithm

It began, as all grand designs do, with promise. Aadhaar was sold to the public as a key, one that unlocked access to subsidies, banking, mobile services, and eventually, life itself. But a key that unlocks can also lock. This one locked citizens into an invisible web of interlinked databases.

A young mother in Nagpur lost her rations when the Aadhaar fingerprint check failed. Twice. No override. Her child was marked absent from the public nutrition system. A glitch, they said. But on paper, the state’s system had already recorded her as fed.

That’s the shift we missed: when identity systems become enforcement tools. Your name becomes a switch. Your fingerprints, a permission slip. Your phone number, a signal flare.

We thought we knew what Aadhaar was for. Now we’re not sure anymore. That loss of certainty — that creeping blur between access and surveillance — is where power quietly takes root.

BharatNet and the Myth of Innocent Infrastructure

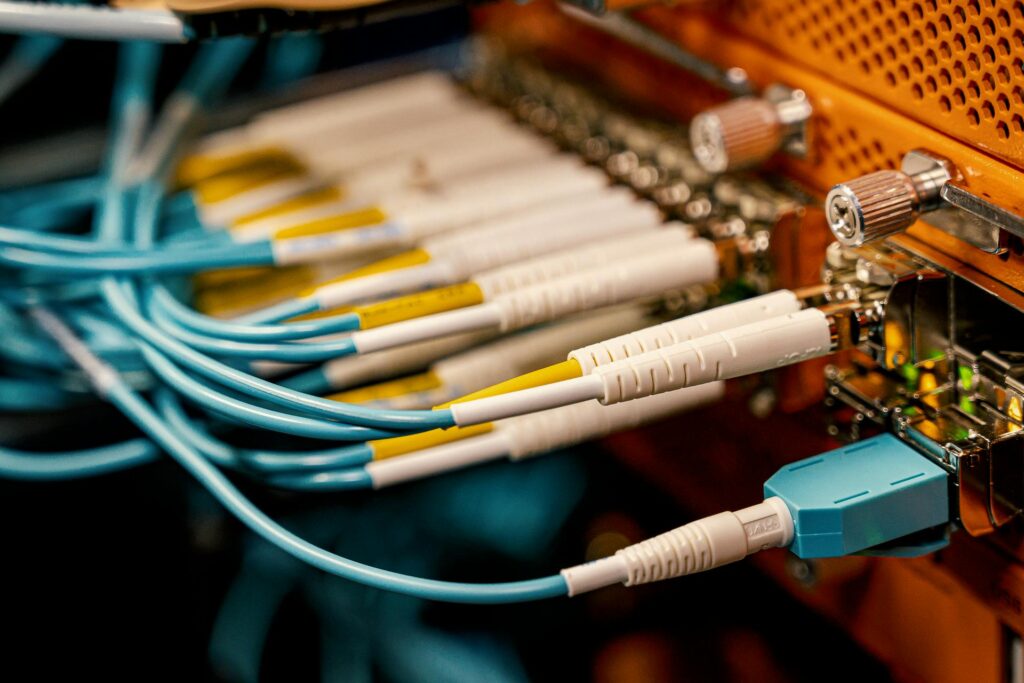

Fiber-optic cables snake across the country now, winding through fields and forests. It’s connectivity for all — or so the headlines say.

But ask the network engineers. Ask the gram panchayat clerks who signed NDAs without understanding what “centralized logging” meant. Ask the villagers whose browsing history is quietly mirrored, stored, aggregated, and categorized.

There’s no dramatic villain here. Just quiet cooperation. An architecture being built in the name of empowerment — but owned, monitored, and governed by people you’ll never meet.

What feels like freedom online in rural India may just be pre-filtered access. When choice becomes an illusion, autonomy erodes. And we rarely notice until it’s gone.

The Normalization of Watching

“Safe City” programs launched with noble intentions: protect women, deter crime, modernize policing.

But take a walk through Hyderabad’s old city after dark. The streets are dotted with black-glass domes. Above chai stalls and butcher shops. Watching.

Facial recognition trials are active. You don’t see them unless you look hard. And even then, they don’t blink.

One 19-year-old was stopped because his facial match history flagged him as “potentially suspicious” — he’d attended three separate protests in 2024. Not illegal. Just… watched. Flagged. Logged.

When cities treat citizens like suspects, the social glue dissolves. Trust thins out. You feel less like part of the city, more like something it’s trying to manage.

Predictive Policing and the Logic of Pre-Crime

“Why wait for a crime when you can prevent it?”

That’s the line from one of India’s emerging predictive policing startups. Their software pulls from FIR records, CCTV patterns, even mobile location data. Police officers now carry heatmaps: red zones, yellow warnings.

But prediction is just judgment dressed up in code. And in India, where caste, religion, and class already shape who gets policed, algorithms trained on those same records only reinforce the old walls.

A Dalit teenager in Lucknow was detained four times in six months. Not charged. Just… “on the watchlist.” His phone calls triggered alerts. His location data intersected too often with “crime-prone” areas.

The moment you feel you’re being judged by a system you can’t see and can’t challenge, fairness evaporates. The rules feel rigged. And justice becomes a ghost.

The Unseen Consequences of Digital Health

The Ayushman Bharat Digital Mission was another promise: seamless, patient-first healthcare. But centralizing health data also centralizes power.

Insurers are experimenting with behavior-based pricing. Startups analyze wearable data to “incentivize” fitness. Employers ask for voluntary health disclosures that quietly become policy.

A Bengaluru techie found her mental health prescription flagged during an insurance review. She wasn’t denied coverage. But the premium doubled. “It’s a risk model,” the insurer said.

Health should be private, sacred, unjudged. But when your data becomes a liability, your social standing shifts, based not on who you are but on how you’re scored.

This Isn’t Inevitable. It’s Designed.

The future isn’t a straight line. It’s a series of decisions disguised as progress.

India’s surveillance future won’t arrive in one dramatic swoop. It will tiptoe in through telecom portals, court filings, software contracts, and policy updates. It will wear the mask of safety, convenience, and empowerment.

But remember this: Every system is designed by someone — and for someone.

What’s missing from Eyes Everywhere isn’t more fear. It’s more agency. The idea that we can still shape the curve, still say “enough,” still demand oversight, audits, and accountability.

The systems watching us do not yet define us.

Let’s make sure they never do.